Vellum

Vellum Product Information

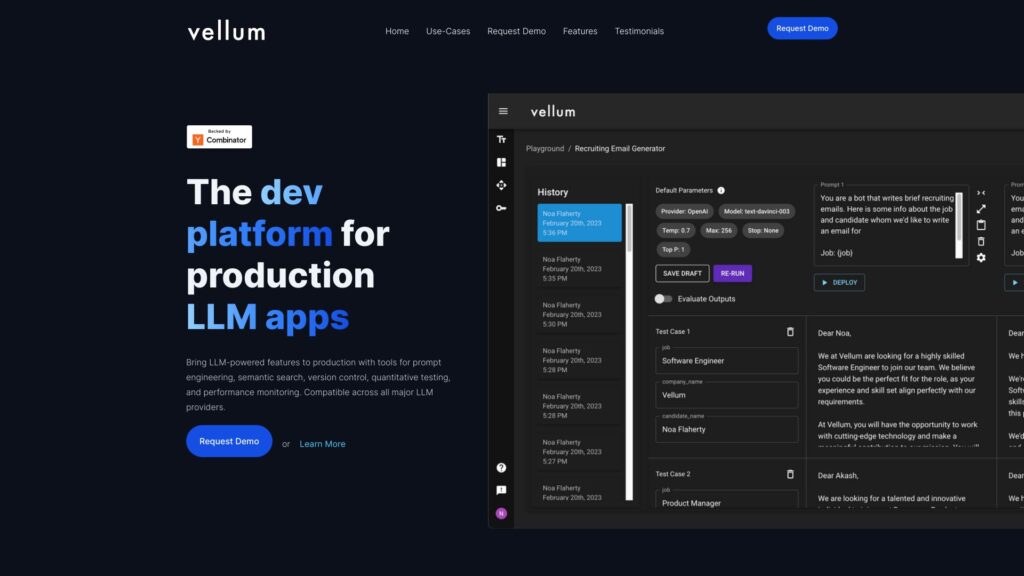

What is Vellum?

Vellum is a development platform for building LLM apps. It provides tools for prompt engineering, semantic search, version control, testing, and monitoring, and it is compatible with all major LLM providers.

How do I use Vellum?

Use Vellum to build LLM-powered applications and bring LLM-powered features to production. Its tools cover prompt engineering, semantic search, version control, testing, and monitoring, which together support rapid experimentation, regression testing, and observability. You can use proprietary data as context in LLM calls, compare and collaborate on prompts and models, and test, version, and monitor LLM changes in production. Vellum is compatible with all major LLM providers and offers a user-friendly UI.

What can Vellum help me build?

Vellum can help you build LLM-powered applications and bring LLM-powered features to production by providing tools for prompt engineering, semantic search, version control, testing, and monitoring.

What LLM providers are compatible with Vellum?

Vellum is compatible with all major LLM providers.

What are the core features of Vellum?

The core features of Vellum include prompt engineering, semantic search, version control, testing, and monitoring.

Can I compare and collaborate on prompts and models using Vellum?

Yes, Vellum allows you to compare, test, and collaborate on prompts and models.

Does Vellum support version control?

Yes, Vellum supports version control, allowing you to track what’s worked and what hasn’t.

Can I use my own data as context in LLM calls?

Yes, Vellum allows you to use proprietary data as context in your LLM calls.
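
As a rough illustration of the general idea, the sketch below picks the most relevant snippet from a small set of documents and places it in the prompt as context. It does not use Vellum's API: the function names, the sample documents, and the prompt wording are all hypothetical, and simple word-overlap scoring stands in for the embedding-based semantic search a real system would likely use.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_documents(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query = bag_of_words(question)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, bag_of_words(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Assemble a prompt that carries the retrieved snippets as context."""
    context = "\n".join(f"- {doc}" for doc in top_documents(question, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical proprietary documents, invented for this example.
DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Support is available on weekdays from 9am to 5pm.",
    "Enterprise plans include single sign-on.",
]

if __name__ == "__main__":
    print(build_prompt("How many days do I have to get refunds?", DOCS))
```

The resulting prompt string would then be sent to whichever LLM provider you choose; that call is left out here because it depends on the provider.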

Is Vellum provider agnostic?

Yes, Vellum is provider agnostic, allowing you to use the best provider and model for the job.

Does Vellum offer a personalized demo?

Yes, you can request a personalized demo from Vellum’s founding team.

What do customers say about Vellum?

Customers praise Vellum for its ease of use, fast deployment, extensive prompt testing, collaboration features, and the ability to compare model providers.